7 Essential DevOps Tools Every Engineer Should Master

DevOps has become a central approach for tightening collaboration between development and operations teams, with the goal of delivering high-quality software faster. DevOps practices rely on a large set of tools that automate builds, tests, and deployments, improve communication, and keep integration and delivery consistent. For engineers new to DevOps, knowing which tools to learn first matters. Here, we explore seven essential DevOps tools that every engineer should know.

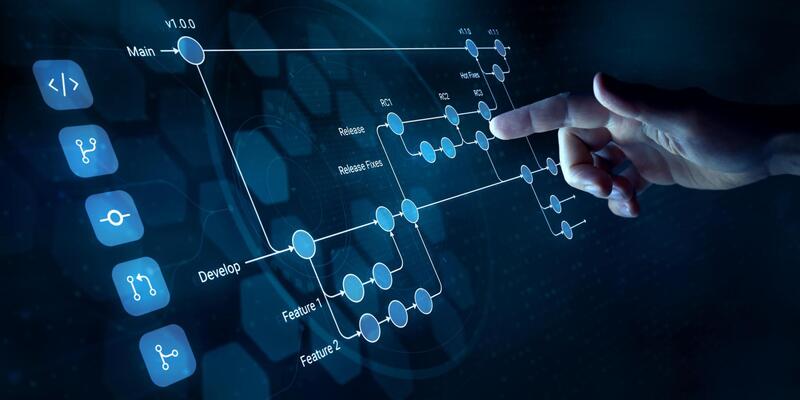

Git

Git is a distributed version control system (DVCS) that has become ubiquitous in modern software development workflows. Originally created by Linus Torvalds in 2005 for managing the Linux kernel development, Git has since gained widespread adoption across various industries and projects of all sizes. Let's delve deeper into its features and importance in the DevOps landscape.

Distributed Version Control:

Git operates as a distributed version control system, meaning that each developer working on a project has a complete copy of the repository on their local machine. This allows for efficient collaboration, as developers can work offline, commit changes locally, and synchronize their work with the central repository when they're ready. It also provides redundancy and resilience, as every local repository serves as a complete backup of the project's history.

Branching and Merging:

One of Git's most powerful features is its branching model. Developers can create lightweight, isolated branches to work on new features, bug fixes, or experiments without affecting the main codebase. This promotes parallel development and enables teams to work on multiple features simultaneously. Git's merging capabilities let developers integrate changes from one branch into another, typically with a three-way merge that compares both branches against their common ancestor; when both sides changed the same lines, Git flags a conflict for a developer to resolve. This workflow supports collaboration and code review.
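To make the idea of a common ancestor (the "merge base") concrete, here is a minimal Python sketch that finds the best common ancestor of two branch tips in a toy commit graph. The graph, the commit names C1 to C5, and the functions ancestors and merge_bases are hypothetical illustrations, not Git's internal implementation; Git itself exposes this calculation as git merge-base.

```python
from collections import deque


def ancestors(graph: dict[str, list[str]], commit: str) -> set[str]:
    """Return every commit reachable from `commit` via parent links, including itself."""
    seen = {commit}
    queue = deque([commit])
    while queue:
        for parent in graph.get(queue.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def merge_bases(graph: dict[str, list[str]], a: str, b: str) -> set[str]:
    """Return the best common ancestors of `a` and `b` (hypothetical helper)."""
    common = ancestors(graph, a) & ancestors(graph, b)
    # Drop any common ancestor that is itself an ancestor of another common ancestor.
    return {
        c for c in common
        if not any(c != d and c in ancestors(graph, d) for d in common)
    }


# Hypothetical history: main is C1-C2-C4; a feature branch forked at C2 (C3-C5).
history = {"C1": [], "C2": ["C1"], "C3": ["C2"], "C4": ["C2"], "C5": ["C3"]}
print(merge_bases(history, "C4", "C5"))  # {'C2'}
```

A three-way merge then compares C4 and C5 against C2, so only the changes each branch made since the fork need to be combined.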

History and Versioning:

Git maintains a comprehensive history of all changes made to the project over time. Each commit in Git represents a snapshot of the project's state at a particular moment, along with metadata such as the author, timestamp, and commit message. This versioning capability enables developers to track changes, revert to previous versions if necessary, and understand the evolution of the codebase over time.
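Git's history is content-addressed: every file version, directory tree, and commit is stored as an object whose ID is a hash of its contents, so identical content always gets the same ID. The short Python sketch below reproduces how Git computes the SHA-1 ID of a file's content (a "blob" object); for repositories using the default SHA-1 object format it should match the output of git hash-object. The function name git_blob_id is our own.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the SHA-1 object ID Git assigns to file content stored as a blob."""
    # Git hashes a header "blob <size in bytes>" plus a NUL byte, then the raw content.
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


print(git_blob_id(b"hello world\n"))  # 3b18e512dba79e4c8300dd08aeb37f8e728b8dad
```

Commits are identified the same way, by hashing their tree ID, parent IDs, author, and message, which is why rewriting any past commit changes the IDs of every commit after it.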

Collaboration and Code Review:

Git fosters collaboration among developers by providing mechanisms for sharing code and reviewing changes. Developers can push their local changes to a central repository, making them accessible to other team members. Code review workflows, facilitated by tools like pull requests (PRs) in platforms such as GitHub or GitLab, allow team members to discuss, review, and approve changes before they are merged into the main codebase. This promotes code quality, knowledge sharing, and accountability within the team.

Integration with CI/CD Pipelines:

Git integrates closely with Continuous Integration/Continuous Delivery (CI/CD) pipelines, enabling automated testing, building, and deployment of software projects. CI/CD tools such as Jenkins, GitLab CI/CD, or GitHub Actions can trigger pipeline executions based on events in the Git repository, such as new commits or pull requests. This automation streamlines the software delivery process, reduces manual intervention, and helps ensure that changes pass automated tests before they are deployed to production.

Open Source Ecosystem:

Git's open-source nature has led to a vibrant ecosystem of tools, extensions, and integrations that extend its functionality and adapt it to various development workflows. From graphical user interfaces (GUIs) for beginners to advanced command-line interfaces (CLIs) for power users, there are numerous Git clients available to suit different preferences and requirements. Additionally, Git integrates seamlessly with popular code hosting platforms like GitHub, GitLab, and Bitbucket, providing robust collaboration features and project management tools.

Jenkins

Jenkins is an open-source automation server widely used in the DevOps ecosystem for automating various aspects of the software development lifecycle, including building, testing, and deploying applications. Originally developed as Hudson in 2004 and renamed Jenkins in 2011, it has evolved into a mature and feature-rich tool with a vast ecosystem of plugins and integrations. Let's explore Jenkins in more detail:

Continuous Integration and Continuous Delivery (CI/CD):

At its core, Jenkins facilitates the implementation of CI/CD pipelines, which are essential practices in modern software development. Continuous Integration involves automatically building and testing code changes as they are committed to the version control repository. Jenkins enables developers to set up automated build jobs triggered by code commits, ensuring that the codebase remains in a functional state and detecting integration issues early in the development process.

Continuous Delivery extends CI by automating the deployment of validated code changes to testing or staging environments. Jenkins allows teams to define sophisticated deployment pipelines, incorporating steps such as code compilation, unit testing, integration testing, static code analysis, artifact generation, and deployment to various environments. With Jenkins pipelines, teams can automate the entire software delivery process, from code commit to production deployment, reducing manual intervention and ensuring consistency and reliability in deployments.
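Real Jenkins pipelines are written in a Jenkinsfile rather than in Python, but the core behavior of a staged pipeline (run stages in order, and stop before deployment if an earlier stage fails) can be modeled in a few lines. This is a simplified sketch: run_pipeline and the stage names are hypothetical, and it ignores Jenkins features such as parallel stages, post actions, and retries.

```python
from typing import Callable


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]]) -> dict[str, str]:
    """Run stages in order; after the first failure, mark the remaining stages as skipped."""
    results: dict[str, str] = {}
    failed = False
    for name, step in stages:
        if failed:
            results[name] = "SKIPPED"
            continue
        ok = step()
        results[name] = "SUCCESS" if ok else "FAILURE"
        failed = not ok
    return results


# Hypothetical stages: the lambdas stand in for real build, test, and deploy commands.
pipeline = [
    ("Build", lambda: True),
    ("Test", lambda: False),  # simulate a failing unit test
    ("Deploy", lambda: True),
]
print(run_pipeline(pipeline))
# {'Build': 'SUCCESS', 'Test': 'FAILURE', 'Deploy': 'SKIPPED'}
```

The key design point is that deployment is gated on every earlier stage succeeding, which is what keeps a broken commit from reaching an environment automatically.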

Plugin Ecosystem:

One of Jenkins' key strengths is its extensive plugin ecosystem, which provides a wide range of integrations with other tools, technologies, and services. Jenkins plugins extend its functionality to support diverse use cases and workflows, ranging from version control systems (e.g., Git, Subversion) and build tools (e.g., Maven, Gradle) to testing frameworks (e.g., JUnit, Selenium) and cloud platforms (e.g., AWS, Azure).

Developers can leverage Jenkins plugins to customize and extend their automation workflows, integrating with third-party services for notifications, reporting, artifact management, security scanning, and more. The plugin architecture allows Jenkins to adapt to different project requirements and toolchains, making it highly versatile and adaptable to various development environments.

Distributed Architecture:

Jenkins supports a distributed architecture, enabling organizations to scale their automation infrastructure to meet the demands of large and complex software projects. In Jenkins' controller-agent architecture (historically called master-slave), multiple agents connect to a central Jenkins controller, which distributes jobs across those machines for parallel execution.

This distributed setup enhances performance, resource utilization, and resilience, as workload distribution can be optimized based on factors such as job type, hardware resources, and network proximity. Additionally, Jenkins supports cloud-based agents, enabling dynamic provisioning of build environments on cloud platforms such as AWS, Azure, or Google Cloud, further enhancing scalability and flexibility.
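Jenkins lets jobs target agents by label (for example, only agents labeled linux). The sketch below models that placement decision with a hypothetical least-loaded policy; Agent, schedule, and the labels are our own illustrations, and Jenkins' real scheduler and build queue differ in detail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    name: str
    labels: frozenset[str]
    executors: int  # how many jobs this agent may run at the same time
    busy: int = 0


def schedule(jobs: list[tuple[str, str]], agents: list[Agent]) -> dict[str, Optional[str]]:
    """Place each (job, required_label) on a matching agent with a free executor."""
    placement: dict[str, Optional[str]] = {}
    for job, label in jobs:
        candidates = [a for a in agents if label in a.labels and a.busy < a.executors]
        if not candidates:
            placement[job] = None  # no capacity: the job would wait in the build queue
            continue
        # Prefer the least-loaded matching agent to spread work across machines.
        agent = min(candidates, key=lambda a: a.busy / a.executors)
        agent.busy += 1
        placement[job] = agent.name
    return placement


agents = [
    Agent("linux-1", frozenset({"linux", "docker"}), executors=2),
    Agent("windows-1", frozenset({"windows"}), executors=1),
]
jobs = [("api-build", "linux"), ("ui-build", "linux"), ("installer", "windows"), ("docs", "linux")]
print(schedule(jobs, agents))
# {'api-build': 'linux-1', 'ui-build': 'linux-1', 'installer': 'windows-1', 'docs': None}
```

Adding a second linux agent to the list would let the docs job run immediately instead of queuing, which is the scaling benefit described above.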

User Interface and Extensibility:

Jenkins provides a user-friendly web-based interface for configuring and managing automation pipelines, job schedules, build histories, and system settings. The intuitive interface makes it easy for users to create and customize automation workflows, define build triggers, set up notifications, and monitor job execution status.

Moreover, Jenkins offers robust APIs and scripting capabilities, allowing developers to automate Jenkins configuration and interact with Jenkins programmatically. Jenkins Pipeline follows a "Pipeline as Code" approach: teams define their automation pipelines in a Groovy-based domain-specific language (DSL), using either declarative or scripted syntax, and store that definition in a Jenkinsfile in their version control repository. This approach promotes collaboration, versioning, and reproducibility of automation workflows, aligning with the DevOps principle of managing infrastructure and automation as version-controlled code, as in infrastructure as code (IaC).

Docker

Docker has revolutionized the way software is developed, shipped, and deployed by popularizing containerization technology. Docker containers encapsulate applications and their dependencies in a portable and consistent environment, enabling developers to build, ship, and run applications reliably across different computing environments. Let's delve into Docker and its significance within the DevOps landscape:

Containerization Technology:

Docker uses containerization technology to package applications and their dependencies into lightweight, standalone containers. Containers isolate applications from one another and from the host system while sharing the host's operating system kernel, which makes them lighter than virtual machines and portable across environments such as development laptops, on-premises servers, and cloud platforms.

By leveraging containerization, Docker largely eliminates the "it works on my machine" problem commonly encountered in software development. Developers can package their applications, along with all required libraries and dependencies, into a Docker container, so the application behaves consistently regardless of the environment in which it is deployed.

Standardization and Reproducibility:

Docker containers provide a standardized runtime environment, enabling developers to build, ship, and deploy applications consistently. With Docker, developers can specify the exact dependencies and configuration required for their applications, ensuring reproducibility across different stages of the software development lifecycle.

Docker images, which serve as the blueprints for containers, encapsulate the application code, runtime, libraries, and dependencies in a portable and immutable format. These images can be versioned, shared, and distributed through Docker registries, facilitating collaboration and enabling teams to deploy applications with confidence.
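Because images are built as a stack of layers, Docker can reuse cached layers when nothing that feeds into them has changed. The simplified Python model below chains a cache key through each build instruction: a layer's key depends on the previous layer's key, the instruction text, and (for COPY steps) a digest of the copied files, so changing one step invalidates that step and every step after it. The function names and input digests such as "src-v1" are hypothetical, and Docker's actual cache logic (especially under BuildKit) has more rules.

```python
import hashlib


def cache_keys(steps: list[tuple[str, str]]) -> list[str]:
    """Chain a cache key through each (instruction, input_digest) build step.

    input_digest stands in for a checksum of the files a COPY step reads;
    it is an empty string for steps that read no build-context files.
    """
    keys: list[str] = []
    parent = ""
    for instruction, input_digest in steps:
        # Each key includes the previous key, so one change invalidates all later layers.
        parent = hashlib.sha256(f"{parent}|{instruction}|{input_digest}".encode()).hexdigest()
        keys.append(parent)
    return keys


def steps_to_rebuild(old: list[tuple[str, str]], new: list[tuple[str, str]]) -> list[str]:
    """Return the instructions in `new` that cannot be served from the old build's cache."""
    for i, (old_key, new_key) in enumerate(zip(cache_keys(old), cache_keys(new))):
        if old_key != new_key:
            return [instruction for instruction, _ in new[i:]]
    # Every shared step matched; only steps appended beyond the old build need to run.
    return [instruction for instruction, _ in new[len(old):]]


before = [
    ("FROM python:3.12-slim", ""),
    ("COPY requirements.txt .", "deps-v1"),
    ("RUN pip install -r requirements.txt", ""),
    ("COPY . .", "src-v1"),
]
after = before[:3] + [("COPY . .", "src-v2")]  # only application source changed
print(steps_to_rebuild(before, after))  # ['COPY . .']
```

This is why Dockerfiles commonly copy dependency manifests and install dependencies before copying the rest of the source: routine code edits then reuse the cached dependency layer.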

Microservices Architecture:

Docker plays a pivotal role in enabling microservices architecture, a design approach where applications are composed of small, independently deployable services. Each microservice runs in its own container, allowing teams to develop, deploy, and scale services independently of each other.

By containerizing microservices, Docker facilitates agility, scalability, and fault isolation within distributed systems. Developers can leverage Docker Compose or container orchestration platforms like Kubernetes to manage multi-container applications, define service dependencies, and automate deployment workflows.

DevOps Integration:

Docker is closely intertwined with DevOps practices, facilitating automation, collaboration, and continuous delivery in software development workflows. Docker containers serve as the building blocks for CI/CD pipelines, allowing developers to package applications into containers, run automated tests, and deploy artifacts to production environments seamlessly.

Docker integrates seamlessly with CI/CD tools like Jenkins, GitLab CI/CD, and CircleCI, enabling organizations to automate build, test, and deployment processes. By incorporating Docker into CI/CD pipelines, teams can achieve faster release cycles, improve code quality, and accelerate time-to-market for software products.

Cloud-Native Ecosystem:

Docker has become foundational to the cloud-native ecosystem, alongside technologies like Kubernetes, Prometheus, and Envoy. Cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer managed container services that run Docker-built (OCI-compatible) container images, such as Amazon Elastic Container Service (ECS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE).

These managed container services abstract the underlying infrastructure complexity and provide scalable, highly available platforms for running Dockerized applications. Organizations can leverage Docker containers and cloud-native services to build resilient, cloud-native applications that can dynamically scale to meet fluctuating demand and optimize resource utilization.

Kubernetes

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Originally developed by Google and later donated to the Cloud Native Computing Foundation (CNCF), Kubernetes has rapidly gained widespread adoption as the de facto standard for container orchestration in the cloud-native ecosystem. Let's explore Kubernetes in more detail:

Container Orchestration:

Kubernetes simplifies the management of containerized applications by abstracting away the complexities of infrastructure provisioning, load balancing, scaling, and networking. With Kubernetes, developers can focus on defining their application's desired state, while Kubernetes takes care of ensuring that the actual state matches the desired state.

Kubernetes orchestrates containers across a cluster of nodes, providing mechanisms for deploying, scaling, updating, and monitoring applications running inside containers. By automating repetitive tasks and providing declarative APIs, Kubernetes enables organizations to achieve greater efficiency, reliability, and scalability in deploying and managing modern, cloud-native applications.
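The desired-state model can be pictured as a control loop: a controller repeatedly compares what should be running with what is running and acts on the difference. This sketch reduces that idea to replica counts; reconcile and the application names are hypothetical, and real Kubernetes controllers watch objects through the API server rather than comparing plain dictionaries.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the actions needed to move the actual replica counts to the desired ones."""
    actions = []
    # An app missing from `desired` has a desired count of zero, so its pods get removed.
    for app in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(app, 0), actual.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} pod(s) for {app}")
        elif have > want:
            actions.append(f"stop {have - want} pod(s) for {app}")
    return actions


desired = {"web": 3, "worker": 2}
actual = {"web": 1, "worker": 2, "legacy": 1}  # e.g. after a node failure and a removed app
print(reconcile(desired, actual))
# ['stop 1 pod(s) for legacy', 'start 2 pod(s) for web']
```

Running the loop again once those actions have been applied returns an empty list, which is the steady state a controller aims for.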

Key Concepts:

Kubernetes introduces several key concepts that form the foundation of its architecture:

Pods: Pods are the smallest deployable units in Kubernetes, comprising one or more containers that share the same network namespace and storage volumes. Pods represent a logical application workload and are scheduled onto nodes by the Kubernetes scheduler.

Deployments: Deployments provide declarative configuration for managing replica sets of pods. Deployments enable rolling updates, scaling, and self-healing capabilities for applications, ensuring that the desired number of pods are running at all times.

Services: Services provide stable endpoints for accessing pods running in a Kubernetes cluster. Services enable load balancing, service discovery, and internal communication between different components of a distributed application.

Nodes: Nodes are individual machines (virtual or physical) that form the Kubernetes cluster. Each node runs the kubelet, the agent that manages pods on that node, and a container runtime (e.g., containerd or CRI-O), allowing it to host and manage containers.

Ingress: Ingress is a Kubernetes resource that manages external access to services within a cluster. Ingress controllers implement these rules, providing HTTP and HTTPS routing, load balancing, and TLS termination for incoming traffic to Kubernetes services.

Scalability and Resilience:

Kubernetes is designed to scale applications dynamically based on demand, allowing organizations to handle fluctuations in workload efficiently. Kubernetes provides horizontal scaling through ReplicaSets and, via the Horizontal Pod Autoscaler (HPA), automatic scaling based on CPU utilization or custom metrics, enabling applications to scale up or down based on resource utilization.
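The Horizontal Pod Autoscaler's core calculation, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces that formula plus min/max clamping; the function name and the default bounds are hypothetical, and the real controller adds behavior such as stabilization windows and special handling of pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling formula, skipping small changes and clamping to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave the replica count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

Scaling down uses the same formula: 5 pods at 20% CPU against a 60% target gives ceil(5 * 0.333...) = 2.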

Furthermore, Kubernetes enhances application resilience by automatically recovering from failures and ensuring high availability. Kubernetes supports features such as pod health checks, automatic pod restarts, rolling updates, and self-healing capabilities, enabling applications to maintain availability and reliability even in the face of node failures or other disruptions.

Ecosystem and Extensibility:

Kubernetes boasts a rich ecosystem of tools, extensions, and integrations that extend its functionality and cater to diverse use cases. The Kubernetes ecosystem includes Helm charts for packaging and deploying applications, Prometheus for monitoring and alerting, Grafana for visualization, Fluentd for logging, and Istio for service mesh capabilities, among others.

Kubernetes also provides extensive support for custom resource definitions (CRDs) and custom controllers, allowing organizations to extend Kubernetes' capabilities and define custom resources tailored to their specific requirements. This extensibility enables organizations to build and operate complex, cloud-native applications effectively within the Kubernetes ecosystem.

Cloud-Native Adoption:

Kubernetes has become the cornerstone of cloud-native adoption, with major cloud providers offering managed Kubernetes services such as Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE). These managed Kubernetes services abstract away the operational overhead of managing Kubernetes clusters, allowing organizations to focus on building and deploying applications.

Moreover, Kubernetes enables organizations to embrace hybrid and multi-cloud strategies by providing a consistent platform for deploying and managing applications across on-premises data centers and multiple cloud environments. This portability and interoperability make Kubernetes an ideal choice for organizations seeking flexibility and agility in their cloud-native journey.

Terraform

Terraform is an infrastructure as code (IaC) tool developed by HashiCorp. It was released as open source and has been distributed under the source-available Business Source License since 2023. It enables users to define and provision infrastructure resources using a declarative configuration language. Terraform simplifies the management of cloud infrastructure by treating infrastructure as code, allowing developers and operators to define, version, and automate the provisioning of resources across various cloud providers and services. Let's explore Terraform in more detail:

Declarative Configuration Language:

Terraform uses a declarative configuration language to define infrastructure resources and their desired state. Users describe the desired configuration of their infrastructure using Terraform configuration files written in HashiCorp Configuration Language (HCL) or JSON. The configuration files specify the desired resources, their attributes, dependencies, and any other necessary configurations.

By adopting a declarative approach, Terraform enables users to focus on specifying the desired end-state of the infrastructure, rather than the procedural steps required to achieve it. Terraform handles the orchestration of resource creation, updating, and destruction based on the desired configuration, ensuring consistency and reproducibility across different environments.
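Terraform's plan step compares the desired configuration with the current state it has recorded and proposes creates, updates, and deletes. The sketch below models that comparison with plain dictionaries keyed by resource address; plan, the resource addresses, and the attributes are hypothetical, and real Terraform also distinguishes in-place updates from replacements and consults each provider's schema.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Diff desired resources against recorded state, keyed by resource address."""
    changes: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for address in sorted(desired):
        if address not in current:
            changes["create"].append(address)
        elif desired[address] != current[address]:
            changes["update"].append(address)
    # Anything in state but no longer in the configuration is scheduled for deletion.
    changes["delete"] = sorted(current.keys() - desired.keys())
    return changes


desired = {
    "aws_instance.web": {"instance_type": "t3.small"},
    "aws_s3_bucket.logs": {"versioning": True},
}
current = {
    "aws_instance.web": {"instance_type": "t3.micro"},
    "aws_instance.old": {"instance_type": "t3.micro"},
}
print(plan(desired, current))
# {'create': ['aws_s3_bucket.logs'], 'update': ['aws_instance.web'], 'delete': ['aws_instance.old']}
```

Once the changes are applied, running the same comparison against the updated state yields three empty lists, which is what makes repeated applies of an unchanged configuration safe.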

Infrastructure as Code:

Terraform promotes the principles of infrastructure as code (IaC), allowing users to manage infrastructure configurations in a manner similar to application code. Infrastructure configurations are versioned, stored in source control repositories, and treated as code artifacts, enabling collaboration, code review, and auditability.

By treating infrastructure as code, Terraform enables organizations to adopt software engineering best practices such as version control, automated testing, and continuous integration/continuous delivery (CI/CD) for infrastructure provisioning workflows. This approach enhances agility, reduces manual errors, and facilitates the automation of infrastructure management tasks.

Multi-Cloud and Hybrid Cloud Support:

Terraform provides support for multiple cloud providers, including Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and others. This multi-cloud support allows users to provision and manage infrastructure resources across different cloud providers using a unified workflow.

Moreover, Terraform supports hybrid cloud environments, enabling users to provision resources across both on-premises data centers and public cloud environments. By providing a consistent workflow for managing infrastructure across diverse environments, Terraform simplifies the complexity of hybrid and multi-cloud architectures.

Resource Dependency Management:

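Terraform automatically manages resource dependencies and orchestrates the provisioning and destruction of resources in the correct order. Most dependencies are inferred: when one resource's configuration references another resource's attributes (for example, a subnet referencing a VPC's ID), Terraform records an implicit dependency. Users can also declare explicit dependencies with the depends_on argument when no such reference exists, for example around network dependencies, security group rules, or load balancer dependencies.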

Terraform analyzes these dependencies, builds a dependency graph, and creates an execution plan that provisions and updates resources in an order that respects their dependencies, handling independent resources in parallel. This dependency management helps ensure that resources are provisioned and configured correctly, reducing the risk of misconfiguration and preserving the integrity of the infrastructure.
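Ordering resources by their dependencies is a topological sort. The sketch below uses Python's standard-library graphlib module to group a hypothetical set of resources into "waves", where everything in a wave can be created in parallel once earlier waves are done. This mirrors how Terraform walks its graph (by default with up to 10 concurrent operations), but the resource names and creation_waves are illustrations only; destruction would walk the same graph in reverse.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on (hypothetical names).
dependencies = {
    "aws_vpc.main": set(),
    "aws_subnet.app": {"aws_vpc.main"},
    "aws_security_group.web": {"aws_vpc.main"},
    "aws_instance.web": {"aws_subnet.app", "aws_security_group.web"},
}


def creation_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group nodes into batches whose dependencies are all satisfied by earlier batches."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(creation_waves(dependencies))
# [['aws_vpc.main'], ['aws_security_group.web', 'aws_subnet.app'], ['aws_instance.web']]
```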

Extensibility and Ecosystem:

Terraform boasts a rich ecosystem of providers, modules, and extensions that extend its functionality and cater to various use cases. Providers are plugins that extend Terraform's capabilities to interact with different cloud providers, services, and APIs. Users can leverage provider plugins to provision resources ranging from virtual machines and storage to databases and serverless functions.

Modules are reusable, shareable units of Terraform configurations that encapsulate infrastructure components and configurations. Users can create, share, and consume modules to encapsulate best practices, standardize configurations, and promote code reuse across different projects and teams.

Additionally, Terraform offers integration with other tools and technologies, such as version control systems (e.g., Git), CI/CD pipelines (e.g., Jenkins, GitLab CI/CD), and configuration management tools (e.g., Ansible, Chef, Puppet). These integrations enable seamless workflows for managing infrastructure as part of broader DevOps practices.

GitLab

GitLab is a complete DevOps platform delivered as a single application that covers the entire software development lifecycle. It offers a comprehensive set of features for source code management, continuous integration and continuous deployment (CI/CD), collaboration, monitoring, and security. Originally created as an open-source project, GitLab has evolved into a robust solution used by organizations of all sizes, ranging from small teams to large enterprises. Let's delve into GitLab's key features and capabilities:

Source Code Management:

GitLab provides powerful version control capabilities with Git repositories at its core. Teams can host and manage their code repositories, collaborate on code changes, and track revisions using GitLab's intuitive web interface or Git command-line interface (CLI). GitLab's branching and merging workflows support Git's native features, enabling teams to collaborate effectively on codebases of any size.

Continuous Integration and Continuous Deployment (CI/CD):

GitLab includes built-in CI/CD capabilities that enable teams to automate the software delivery pipeline from code commit to production deployment. Users define CI/CD pipelines in a YAML file (.gitlab-ci.yml) stored in the repository, allowing for version-controlled, reproducible build and deployment configurations. GitLab Runners execute pipeline jobs on designated infrastructure, enabling parallel and distributed builds for faster feedback cycles.
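By default, GitLab runs all jobs in a stage in parallel and starts the next stage only after the previous one succeeds (the needs keyword can relax this). The sketch below groups jobs from a .gitlab-ci.yml-style structure, already parsed into a Python dictionary, into that stage order. stage_plan and the job names are hypothetical, and keyword handling is simplified: only a few top-level keywords are skipped, hidden jobs whose names start with a dot are ignored, and jobs without a stage fall into test, GitLab's default stage.

```python
# A small subset of top-level .gitlab-ci.yml keywords that are not jobs.
GLOBAL_KEYWORDS = {"stages", "variables", "default", "include", "workflow"}


def stage_plan(config: dict) -> list[tuple[str, list[str]]]:
    """Group job names by stage, in the order the stages are declared."""
    plan: dict[str, list[str]] = {stage: [] for stage in config["stages"]}
    for name, job in config.items():
        # Skip global keywords and hidden jobs (names starting with ".").
        if name in GLOBAL_KEYWORDS or name.startswith(".") or not isinstance(job, dict):
            continue
        stage = job.get("stage", "test")  # jobs without a stage run in "test"
        if stage not in plan:
            raise ValueError(f"job {name!r} uses undeclared stage {stage!r}")
        plan[stage].append(name)
    return [(stage, sorted(jobs)) for stage, jobs in plan.items() if jobs]


config = {
    "stages": ["build", "test", "deploy"],
    "compile": {"stage": "build", "script": ["make"]},
    "unit-tests": {"stage": "test", "script": ["make test"]},
    "lint": {"script": ["make lint"]},  # no stage given, so it lands in "test"
    "deploy-prod": {"stage": "deploy", "script": ["./deploy.sh"]},
}
print(stage_plan(config))
# [('build', ['compile']), ('test', ['lint', 'unit-tests']), ('deploy', ['deploy-prod'])]
```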

Collaboration and Project Management:

GitLab provides robust collaboration features to streamline teamwork and communication within development teams. Project management tools such as issue tracking, kanban boards, and milestones allow teams to plan, prioritize, and track progress on tasks and deliverables. Additionally, merge requests (MRs) facilitate code reviews, discussions, and feedback loops, promoting code quality and knowledge sharing among team members.

Monitoring and Performance:

GitLab has offered monitoring integrations that provide visibility into the health and performance of applications and infrastructure. Its Prometheus integration let teams collect, store, and visualize time-series metrics, and bundled Grafana dashboards offered customizable views of application performance and system metrics. Monitoring capabilities help teams identify bottlenecks, diagnose issues, and optimize application performance.

Security and Compliance:

GitLab prioritizes security and compliance by offering a range of features to identify, remediate, and prevent security vulnerabilities and compliance violations. Static application security testing (SAST), dynamic application security testing (DAST), container scanning, and dependency scanning help teams identify security vulnerabilities in code, dependencies, and container images early in the development process. Additionally, GitLab's compliance management features assist organizations in meeting regulatory requirements and industry standards.

Scalability and Extensibility:

GitLab is highly scalable and adaptable to organizations of all sizes and complexities. It offers self-managed and cloud-hosted deployment options to accommodate different infrastructure requirements and deployment preferences. GitLab's extensive API and webhook support enable integration with third-party tools and services, allowing organizations to extend GitLab's functionality and integrate it seamlessly into existing workflows and toolchains.

Community and Support:

GitLab has a vibrant and active community of users, contributors, and advocates who contribute to its development, documentation, and ecosystem. The GitLab Community Edition (CE) is open-source and free to use, providing access to essential features for source code management and collaboration. GitLab Enterprise Edition (EE) offers additional features and support options tailored to the needs of enterprise customers, including advanced security, compliance, and scalability capabilities.

AWS/Azure/GCP (Cloud Providers)

Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are three of the leading cloud service providers, offering a wide range of infrastructure and platform services to support diverse workloads and use cases. Let's explore each of these cloud providers in more detail:

Amazon Web Services (AWS):

AWS is the largest and most widely adopted cloud platform, offering a comprehensive suite of cloud services across compute, storage, databases, networking, machine learning, analytics, security, and more. Some key AWS services include:

Amazon EC2: Elastic Compute Cloud (EC2) provides scalable virtual servers (instances) on-demand, allowing users to deploy and manage applications in the cloud.

Amazon S3: Simple Storage Service (S3) offers scalable object storage for storing and retrieving data, with high availability, durability, and security features.

Amazon RDS: Relational Database Service (RDS) provides managed database services for popular database engines such as MySQL, PostgreSQL, Oracle, SQL Server, and Amazon Aurora.

AWS Lambda: Lambda enables serverless computing, allowing users to run code in response to events without provisioning or managing servers.

Amazon ECS/EKS: Amazon Elastic Container Service (ECS) and Elastic Kubernetes Service (EKS) provide container orchestration platforms for deploying and managing containerized applications at scale.

Amazon VPC: Virtual Private Cloud (VPC) enables users to provision isolated sections of the AWS cloud, including virtual networks, subnets, route tables, and security groups.

AWS offers a global network of data centers (regions and availability zones) to ensure low latency, high availability, and compliance with data residency requirements. Additionally, AWS provides a rich ecosystem of services, tools, and partner integrations to support diverse use cases, from web hosting and mobile app development to big data analytics and artificial intelligence.

Microsoft Azure:

Azure is Microsoft's cloud computing platform, offering a comprehensive set of services and tools for building, deploying, and managing applications and services. Azure services span compute, storage, databases, networking, AI, IoT, DevOps, security, and more. Key Azure services include:

Azure Virtual Machines: Similar to AWS EC2, Azure VMs provide scalable virtualized compute resources for running applications and workloads in the cloud.

Azure Blob Storage: Blob Storage offers scalable object storage for unstructured data, with features such as tiered storage, lifecycle management, and encryption.

Azure SQL Database: Azure SQL Database provides managed relational database services with built-in intelligence, high availability, and scalability.

Azure Functions: Functions is Microsoft's serverless computing offering, allowing users to run event-driven code without managing infrastructure.

Azure Kubernetes Service (AKS): AKS offers managed Kubernetes container orchestration for deploying, managing, and scaling containerized applications.

Microsoft Entra ID (formerly Azure Active Directory): Entra ID provides identity and access management services, enabling single sign-on, multi-factor authentication, and user lifecycle management.

Azure emphasizes integration with Microsoft's ecosystem of products and services, including Windows Server, SQL Server, Office 365, and Visual Studio. Azure also offers hybrid cloud solutions for seamlessly extending on-premises data centers to the cloud, as well as edge computing services for processing data closer to the source.

Google Cloud Platform (GCP):

GCP is Google's cloud computing platform, offering a broad range of services for infrastructure, data analytics, machine learning, AI, and application development. Key GCP services include:

Google Compute Engine: Compute Engine provides scalable virtual machines for running applications and workloads in Google's infrastructure.

Google Cloud Storage: Cloud Storage offers scalable object storage with features such as multi-regional replication, versioning, and lifecycle management.

Google Cloud SQL: Cloud SQL provides managed database services for MySQL, PostgreSQL, and SQL Server, with features such as automated backups, replication, and scalability.

Google Cloud Functions: Cloud Functions offers serverless computing, allowing users to run event-driven code in response to events from Google Cloud services.

Google Kubernetes Engine (GKE): GKE provides managed Kubernetes container orchestration for deploying, managing, and scaling containerized applications.

Google Cloud Identity and Access Management (IAM): IAM provides centralized access control and security policies for managing user access to Google Cloud resources.

GCP differentiates itself with its strengths in data analytics, machine learning, and AI, drawing on Google's expertise in these areas. GCP offers services such as BigQuery for large-scale data analytics and Vertex AI (the successor to AI Platform) for building and deploying machine learning models at scale, and it provides strong support for TensorFlow, the open-source machine learning framework created by Google.

Common Characteristics:

While AWS, Azure, and GCP offer unique features and capabilities, they share several common characteristics:

Scalability: All three cloud providers offer scalable infrastructure resources that can be provisioned and scaled up or down based on demand.

Reliability: AWS, Azure, and GCP operate global networks of data centers with redundant infrastructure and high availability features to ensure reliability and uptime.

Security: Security is a top priority for all cloud providers, with features such as encryption, identity and access management (IAM), network security, and compliance certifications to protect data and resources.

Flexibility: AWS, Azure, and GCP support a wide range of programming languages, frameworks, operating systems, and third-party integrations, providing flexibility for diverse workloads and use cases.

Pay-as-You-Go Pricing: Cloud services are typically billed on a pay-as-you-go or consumption-based pricing model, allowing users to pay only for the resources they use without upfront capital expenditures.
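Pay-as-you-go billing reduces to usage multiplied by unit price, summed over resources. The sketch below estimates a monthly bill; the rates are made-up placeholders rather than real AWS, Azure, or GCP prices, and real bills add factors such as tiered pricing, data transfer, and discounts.

```python
# Hypothetical unit prices, NOT real provider pricing.
RATES = {
    "vm_hours": 0.05,           # per VM-hour
    "storage_gb_months": 0.02,  # per GB stored for a month
}


def monthly_cost(usage: dict[str, float], rates: dict[str, float] = RATES) -> float:
    """Sum quantity * unit price for each metered item, rounded to cents."""
    return round(sum(quantity * rates[item] for item, quantity in usage.items()), 2)


always_on = {"vm_hours": 730, "storage_gb_months": 100}       # one VM running all month
business_hours = {"vm_hours": 176, "storage_gb_months": 100}  # 8 h x 22 weekdays
print(monthly_cost(always_on))       # 38.5
print(monthly_cost(business_hours))  # 10.8
```

The comparison shows why shutting down or scaling in idle resources matters under consumption-based billing: the storage cost is unchanged, but compute cost drops with the hours actually used.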

Conclusion

Mastering these essential DevOps tools empowers engineers to embrace automation, collaboration, and agility in their software development practices. By leveraging the capabilities of these tools, DevOps teams can accelerate the delivery of high-quality software, improve operational efficiency, and drive innovation in today's competitive landscape. As technology continues to evolve, staying abreast of emerging tools and best practices will be crucial for engineers striving to excel in the dynamic field of DevOps.
